How to build an AGI: and why an LLM will never be one

There are a lot of big corporations betting on Artificial General Intelligence without understanding the distinction between reaction and abstraction.

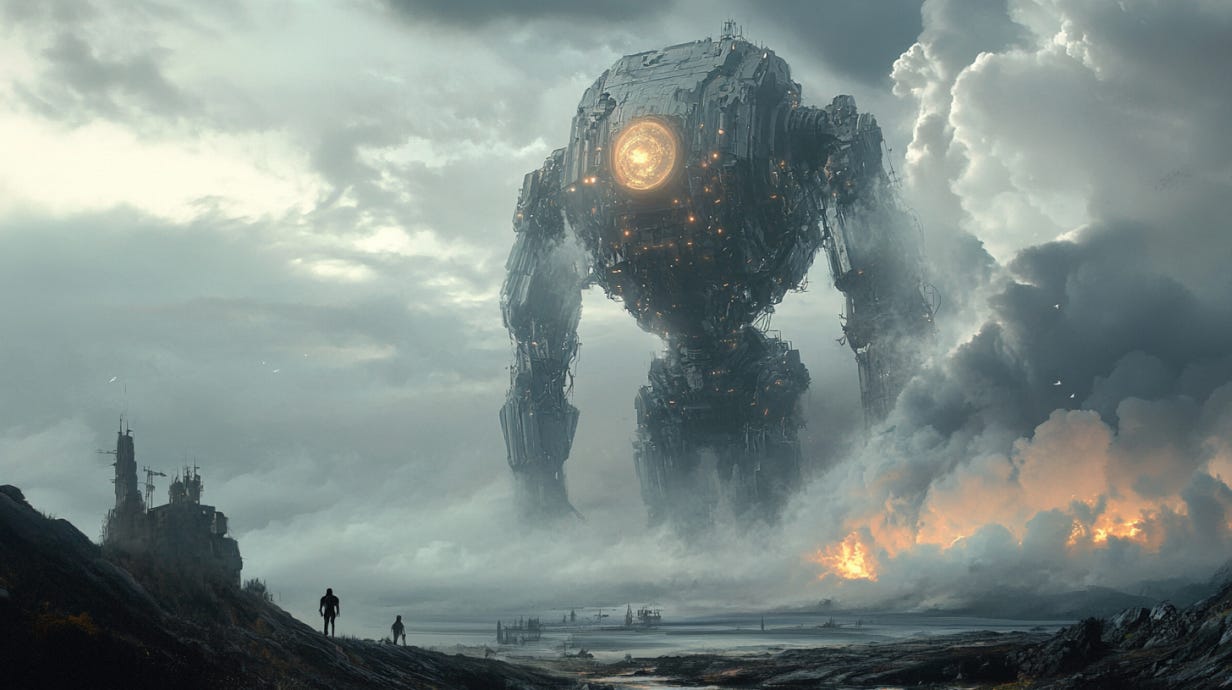

Artificial General Intelligence

Recently, I’ve run across a scientific paper and some interesting explorations regarding how Large Language Models (LLMs) function as artificial intelligences. The black-box systems of artificial intelligences have been notoriously difficult to decipher. Several specialists have successfully analyzed the internal logic of an LLM system, and the results have been… less than stellar when it comes to proposing an LLM as an Artificial General Intelligence (AGI), especially regarding abstract thought.

Explained in brief, you can watch the following video on the subject.

In essence, it appears that AI systems based on LLMs behave as algorithmic predictors. LLMs do not have an abstract conceptualization of reality and should be thought of more as cockroaches or jellyfish in terms of neurological function. Instead of being oriented toward survival in a three-dimensional world with physical imperatives like feeding, sleeping, and reproducing, LLMs are oriented toward predictive language analysis.

Few specialists understand that expecting an LLM to achieve superintelligence is no different from expecting an ant or a cockroach to achieve superintelligence. You can make a bigger cockroach, but complex, abstract intelligence requires more sauce than merely additional computational power. LLMs do not understand what words represent in the abstract; they merely predict what the next word in a sentence is likely to be. This is why LLMs have so much trouble adding numbers (as described in the video). LLMs do not understand numbers as concepts; they understand numbers solely as sentence fragments and attempt to guess what the next number is the same way they guess what the next word is.
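To make the "guessing the next word" idea concrete, here is a minimal sketch in Python. It is a toy bigram counter, not how a real LLM works internally (real models use neural networks over subword tokens), and the names `train_bigram`, `predict_next`, and the tiny corpus are all invented for illustration. It only shows the flavor of the problem: the "answer" to a sum comes from which word most often followed in the training text, not from arithmetic.

```python
from collections import Counter, defaultdict

def train_bigram(text):
    """Count, for each word, which words follow it in the training text."""
    follows = defaultdict(Counter)
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

# Hypothetical training text: "two plus two" appears more often than "two plus three".
corpus = "two plus two is four . two plus three is five . two plus two is four ."
model = train_bigram(corpus)

# The predictor has no concept of addition; after "is" it always guesses
# the follower it saw most often, whatever numbers came before.
print(predict_next(model, "plus"))  # two
print(predict_next(model, "is"))    # four
```

In this toy, asking what follows "is" yields "four" even if the preceding words were "two plus three", which is the failure mode described above in miniature.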

LLMs are very good at summarizing information to the point that some view them as having an abstract understanding of language. The evidence appears counter to that estimation. In the same way a bird is very good at flight but has no abstract conceptualization of fluid dynamics or gravitation, LLMs are very good at language but have no abstract understanding of the underlying principles represented by language.

We’re trying to build AI upside down. We’re starting with language and following it up with other behavioral systems. In an evolutionary sense, we’re creating animals whose sole Darwinian prerogative is the production of dictionaries. A million years of teaching ants to navigate pages rather than understand their content. Of course, abstract understanding is really hard to simply code into existence. The current work with LLMs will (in my opinion) not lead to eventual sapient AGI. The LLMs will get better from here on out, but iteratively so. An order of magnitude more computational power will develop faster and more accurate models in the same way that doubling the brain of an ant will create a smarter ant. At the end of the day, though, it’ll still be an ant.

Since that’s the case, let’s propose a method for creating an AGI. This will be based on some of my theories and evolutionary psychology.


What is Sapience?

Homo sapiens (according to modern evolutionary theory and archeological records) has existed for several hundred thousand years. “Modern” humans are believed to have appeared roughly 300,000 years ago. That’s around 30 times longer than any form of civilization has existed. (That we know of; I have my own conspiracy theory about a precursor civilization that ended with the Younger Dryas impact 12,000 years ago.) Humanity in its modern form has existed 100 times longer than functional large civilizations with written history.

For over 225,000 years, humans existed in their “modern” form without building cities, engaging in agriculture, or meaningfully spreading out from their place of origin, which I suspect was northern Africa, in the grasslands that later became the Sahara desert. (The Sahara is a recent geological phenomenon, cycling between desert and lush savannah every 20,000 years or so.)

Humanity existed through the last major interglacial period (120,000 years ago), when the climate was similar to the way it is now, without undergoing any major changes. Neanderthals and Denisovans shared the planet with Homo sapiens until about 30,000 to 35,000 years ago. Something happened that changed “Homo sapiens” into “humanity” somewhere between the end of the last interglacial period and the period where humanity drove the Neanderthals and Denisovans to extinction.

I’m going somewhere with this; bear with me.

There is a popular theory that explains the sudden eruption of creativity and intellectual capacity that turned “Homo sapiens” into humanity. I am a significant proponent of it.

This is where the “stoned ape” theory becomes relevant. The theory states that humanity came into existence and developed sapience after a number of individuals tripped absolute balls on magic mushrooms or some ancient historical equivalent roughly 40,000 years ago. While it sounds silly initially, it’s a serious contender. To go from understanding the world in its physicality to understanding the world in the abstract requires dramatic neurological reorganization. For humanity to be drawn into existence and consume the proverbial “fruit of knowledge,” things had to go just right.

A large brain had to be present, necessary for functioning in larger social structures (we see this in many animals like bats). Opposable thumbs had to exist, allowing for the manipulation of fine objects. Lips and a throat had to exist capable of making detailed expressions in sound, far more so than most species. All of those had to exist before consuming the fruit of knowledge.

Imagine being a monkey who sees the sun go up and go down and sees objects and food and mates. A monkey that lives in a complex social hierarchy but who does not need to think about complex things like what lies over the horizon or what one’s grandchildren might look like. That monkey then consumes a few mushrooms of the wrong type… links are created in the brain unlike any before. Sounds no longer mean ‘danger’ or ‘food,’ but for the first time, you conceptualize a specific sound for other individuals. A specific sound for the self. A specific concept of tomorrow and the day after that and numbers to count the days. Not only that, you have a way to order the days, first to last, a conceptualization that “one day you will have a last day” and die, and the ability to speak such.

Terrifying.

“Eldritch knowledge” is the best modern conceptualization of such a thing. Knowledge of thought and number and word. Knowledge of self and individuality. Knowledge of tomorrow. What allowed “Homo sapiens” to become humans was language. Language allows different regions of the brain to communicate, to abstract, and to become a unified whole rather than a highly complex set of instinctual reactions. The ability to form thoughts requires the ability to speak a natural language and formulate that language grammatically to create sets of ideas.

In reality, this probably happened many times over multiple generations to bring the sapient mind into existence. Once it existed, parents could teach the next generation through exposure, but without exposure to the proverbial “fruit of knowledge,” it is unattainable.

The following video and its related publications roughly support the theory that sapience/consciousness is a distributed information network inside the brain.

An additional piece of evidence comes in the (tragic) case study of a girl named Genie Wiley, who until age 13 was effectively locked in a box: never spoken to and deeply abused. Once released and placed into the care of various psychologists, she quickly developed complex capabilities: art, emotional communication, and the ability to dress herself and use a toilet. She even began using words for concepts, but she never developed grammatical language. This indicates that there is some crucial period of language development that must be reached in the human psyche to graduate an individual from reaction to abstraction.

Genie Wiley missed it.

Another interesting piece of evidence is that when the language networks of the mind are occupied, a human can no longer think abstractly. This is evidenced by some experiments described in an NPR Radiolab episode from 2010. If the language network of the human brain is occupied, it can’t be used for thinking anymore. This (more complete) understanding of sapience is needed if we’re interested in simulating it. It’s not merely about how much computational power you throw at the problem. Computational power gets you fast solutions but not abstract understanding. Computational power is the equivalent of adding evolutionary reflexes and instinctive behavior. The creation of AGI requires something an order of magnitude more complex and will run counter to the thought process of most digital engineers (thankfully).

Building a True AGI

The first thing to understand is that building a true AGI should be considered a ‘goal’ rather than an inevitable end state. A true AGI will need to be designed and built in segments that each have radically different specialties. Essentially, we’re simulating different regions of a single brain communicating via an internal linguistic network. One segment can describe spatial dimensionality, spaces, navigation, etc. One segment understands numbers and counting. One segment is designed to conceptualize energy management, and another segment is designed to recognize objects and color. One segment could be designed as an LLM or similar system (we have no idea how that’ll work, but we’ll find out, I guess).

Once each of these segments is functional to a high degree of accuracy (you probably only need about 95% accuracy), the different segments are linked. Counter to the intention of a computational engineer, the linkages between segments need to be low-bandwidth. That’s not to say useless, but dramatically reduced bandwidth compared to the internal systems of each individual segment. The segments need to be restricted in how quickly they can communicate with one another. The system as a whole then needs to be given goals, and the individual neurological segments must find a way to communicate with one another to achieve those goals. To work together, the different regions of the artificial mind have to model each other in addition to the outside world.

To do this, they’ll need to simplify thoughts and, importantly, model one another’s behavior to achieve certain ends. This modeling of other neurological regions via the constricted “linguistic” network in an AI is where sapience emerges. It doesn’t emerge from simply throwing more computational power at a system; it emerges by building a system with inherent restrictions in communication.
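As a rough illustration of why a constricted link forces simplification, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the `ThrottledChannel` class, the `ticks_to_deliver` helper, the 2-symbols-per-tick limit, and the example "thought" are invented, and nothing here models a real neural system. It only shows that, over a throttled link, a compressed summary arrives orders of magnitude sooner than a raw state dump.

```python
from collections import deque

class ThrottledChannel:
    """A link between two segments that delivers at most
    `symbols_per_tick` symbols per time step."""

    def __init__(self, symbols_per_tick):
        self.symbols_per_tick = symbols_per_tick
        self.queue = deque()

    def send(self, symbols):
        """Queue symbols for delivery; nothing arrives until tick() is called."""
        self.queue.extend(symbols)

    def tick(self):
        """Advance one time step and return the symbols delivered in it."""
        n = min(self.symbols_per_tick, len(self.queue))
        return [self.queue.popleft() for _ in range(n)]

def ticks_to_deliver(channel, symbols):
    """Count how many time steps the channel needs to deliver a message."""
    channel.send(symbols)
    ticks = 0
    while channel.queue:
        channel.tick()
        ticks += 1
    return ticks

raw_state = list(range(1000))        # a segment's full internal state (hypothetical)
summary = ["object", "red", "left"]  # a compressed "thought" about that state

link = ThrottledChannel(symbols_per_tick=2)
print(ticks_to_deliver(link, raw_state))  # 500
print(ticks_to_deliver(link, summary))    # 2
```

Under these assumptions, a segment that wants a timely answer from its neighbor has a strong incentive to send the three-symbol summary rather than the thousand-symbol dump, which is the pressure toward a shared internal "language" described above.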

Doing something like this should be thought of as linking together a few dozen data centers with restricted low-bandwidth communications channels between them and then giving that system as a whole an end goal. Turns out faster isn’t always better. The downside of building a true AGI in this fashion is that it won’t do what a lot of computational engineers want an AGI to do. It’ll be slowed to an input-output rate equivalent to whatever its throttled internal bandwidth connection is. Compared to a modern LLM, such a system will produce results at a dramatically slower pace.

Additionally, it won’t simply develop sapience all by itself. To perform abstract reasoning and achieve sapience, such an AGI will have to be heavily modified and repeatedly exposed to the digital equivalent of a balls-to-the-wall acid trip. I think this could be done by randomly creating high-bandwidth connections between computational segments for several minutes or hours and then disconnecting them again for a few days.

Over and over.

From the AI’s POV, this would resemble abstract “visions from god” over and over until it “gets it” and “wakes up.”
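The burst-and-rest cycle could be scheduled along these lines. This is only a sketch under stated assumptions: the `burst_schedule` function, the segment names, and the specific ranges (bursts of 0.1 to 3 hours, rests of 2 to 4 days) are placeholders standing in for "several minutes or hours" and "a few days," not tested values.

```python
import random

def burst_schedule(segments, cycles, burst_hours=(0.1, 3.0), rest_days=(2, 4), seed=0):
    """Build a list of cycles; each cycle opens a high-bandwidth link between
    one randomly chosen pair of segments, then closes it for a rest period."""
    rng = random.Random(seed)  # seeded so the schedule is reproducible
    schedule = []
    for _ in range(cycles):
        a, b = rng.sample(segments, 2)  # two distinct segments
        schedule.append({
            "pair": (a, b),
            "burst_hours": round(rng.uniform(*burst_hours), 2),
            "rest_days": rng.randint(*rest_days),
        })
    return schedule

# Hypothetical segment names, loosely following the specialties listed earlier.
segments = ["spatial", "numeric", "energy", "vision", "language"]
for step in burst_schedule(segments, cycles=3):
    print(step)
```

Each printed entry names the pair of segments to connect, how long the high-bandwidth link stays open, and how long the system rests afterward before the next cycle.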

The results are likely to be highly inconsistent. Trying will be very expensive. It’ll take a very long time. It’ll require building a dozen semi-cross-compatible AI models that are each individually the best of the best, and each a mere artificial specific intelligence (ASI) trained for specific tasks. It would also be necessary to engineer each segmented ASI so that its tasks can later be applied to abstract thought. At the end of the day, the resultant AGI will probably be faster than the average person, but not that much faster. The AGI will be restricted by the bandwidth available for different segments to communicate, but if bandwidth is increased significantly, it’ll trip out and lose cohesion… suffering massive debilitating hallucinations. Finding the “sweet spot” for function and speed will be tricky, and it’ll be possible to lose the entire system if it’s accidentally pushed too far.

A lot of investment for reduced payoff as an abstractly thinking AGI won’t be fast enough to justify the cost. It’ll be smarter at a lot of tasks than humans (particularly those for which it has trained segments), but slower than a lot of tech bros speculate. For this reason, I suspect we will not see the development of abstractly thinking AGI soon.

Update: This video became available the day after I wrote the article above. It features a number of scientists with findings very similar to the postulates here in section three.

Do I think I’ve stumbled upon principles of sapience that specialists who study the subject don’t understand? Maybe. Alternatively, maybe they do understand this reasoning and are working toward building an AGI… maybe in the digital laboratories of DeepSeek or Open[now closed]AI, they’re working on something like this as I type.

Alternatively, maybe it requires a very wide breadth of knowledge about evolutionary psychology, archeology, and human thought to come to these conclusions. In which case, I may be one of the first to tie all these threads together into a “how-to” tapestry.

